Inspired by Google’s inceptionism art, my colleagues and I have created an interactive visualization of a hallucinating neural network. You can find it on Twitch at http://www.twitch.tv/317070.

It has been running there for a week now, and in this blog post I want to discuss some of the results.

The source code

A request we often got was: “Can I have the source code?” Sure you can, here you go. We used Python, Theano and Lasagne to implement everything. Feel free to experiment with the code and create your own interactive fractals.

A number of things we did:

- We used a Student-t distribution as a prior on all patches of 8x8x3 pixels.

- We used this prior on multiple scales, which helped keep big objects from breaking down into smaller ones.

- Blurring between optimization steps is important (see this article). In our case, we get it for free from zooming in on the picture, which involves an interpolation step.

- We optimized the logits of the network, not the softmax (as that seemed to cause numerical instability).

- To aim at multiple subjects, we implemented the dense layers of VGG-16 as convolutional layers. Since our images are bigger than the ones VGG-16 was designed for, the output of the network then carries a little information about where objects are located in the image. Using this information, we aimed the top half of the output at the first subject and the bottom half at the second. This way, approximately the upper half of the image would optimize towards the first subject, and the bottom half towards the second.

- The network only generates a new frame every 7-8 seconds. We interpolated between them to achieve a smooth framerate.
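To make a few of these points more concrete, here is a minimal NumPy sketch of three of them: a multi-scale Student-t prior on 8x8x3 patches, an objective on raw logits that aims the top and bottom halves of a spatial class-score map at two subjects, and interpolation between network frames. It is not the code behind the stream. The degrees of freedom `NU`, the scale `SIGMA`, the per-patch mean centring, the 2x2 average-pooling pyramid, the linear crossfade and all function names are hypothetical choices made for illustration, and the gradient step itself is left out. `sliding_window_view` needs NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

# Hypothetical hyperparameters: the post does not state the fitted values.
NU = 3.0      # Student-t degrees of freedom (assumed)
SIGMA = 0.1   # isotropic scale for pixel values in [0, 1] (assumed)
PATCH = 8     # 8x8x3 patches, as described in the post


def patch_log_prior(image, nu=NU, sigma=SIGMA, patch=PATCH):
    """Unnormalized isotropic Student-t log-density, summed over every
    overlapping patch x patch x C patch of an (H, W, C) image.

    Assumption: each patch is centred on its own per-channel mean, so flat
    regions cost nothing and high-frequency noise is penalized. A fitted
    model would use a learned mean and covariance instead.
    """
    channels = image.shape[2]
    windows = sliding_window_view(image, (patch, patch, channels))
    patches = windows.reshape(-1, patch * patch, channels)
    centred = patches - patches.mean(axis=1, keepdims=True)
    dim = patch * patch * channels
    sq_dist = (centred ** 2).sum(axis=(1, 2)) / (nu * sigma ** 2)
    # log p(x) = const - (nu + d) / 2 * log(1 + ||x - mu||^2 / (nu * sigma^2))
    return float(-0.5 * (nu + dim) * np.log1p(sq_dist).sum())


def downsample(image):
    """Halve the resolution with 2x2 average pooling (odd edges are cropped)."""
    h, w = image.shape[0] // 2, image.shape[1] // 2
    return image[: 2 * h, : 2 * w].reshape(h, 2, w, 2, -1).mean(axis=(1, 3))


def multiscale_log_prior(image, scales=3):
    """Apply the same patch prior on an image pyramid, so structure larger
    than 8x8 pixels is regularized too."""
    total = 0.0
    for _ in range(scales):
        if min(image.shape[:2]) < PATCH:
            break
        total += patch_log_prior(image)
        image = downsample(image)
    return total


def aimed_logit_objective(logit_map, first, second):
    """Score to maximize, taken on raw logits rather than softmax outputs.

    logit_map has shape (num_classes, h, w): the spatial class-score map you
    get when the dense layers run as convolutions on a larger image.
    The top half is pushed towards class `first`, the bottom half towards
    class `second`.
    """
    half = logit_map.shape[1] // 2
    return float(logit_map[first, :half].sum() + logit_map[second, half:].sum())


def crossfade(frame_a, frame_b, steps):
    """Yield `steps` frames blending linearly from frame_a towards frame_b.
    frame_b itself is not yielded; it starts the next segment."""
    for i in range(steps):
        t = i / steps
        yield (1.0 - t) * frame_a + t * frame_b


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    flat = np.full((32, 32, 3), 0.5)
    noisy = flat + 0.2 * rng.standard_normal(flat.shape)
    print(multiscale_log_prior(flat) == 0.0)   # flat patches cost nothing
    print(multiscale_log_prior(noisy) < 0.0)   # noise is penalized
```

In an optimization loop, the image would be updated along the gradient of `aimed_logit_objective(...) + weight * multiscale_log_prior(image)`, with `weight` another free parameter. With an assumed 25 fps output and a new network frame every 7.5 seconds, `crossfade` would need `steps` of roughly 190 per keyframe.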

The people

Overall, I have received almost only positive comments. Most surprising to me was that a lot of the response came from the art world, not only from the machine learning community. I have received offers to put this in art exhibitions, which raises some fundamental questions. Am I still the artist, when I could never even have imagined the things that come out of the algorithm? Is it still ‘valuable’, when it can be created and recreated in real time, endlessly? Or is it exactly this ephemerality, this infinite cornucopia of visually rich images, that makes it interesting? And what will the future bring, given that the code was hacked together in only 10 days?

By the way, another group we saw a lot on the stream was people doing drugs. I can’t imagine why.

The images

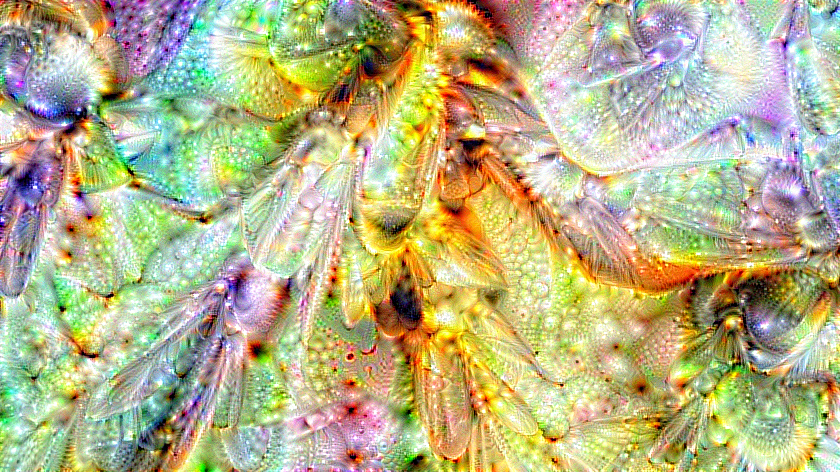

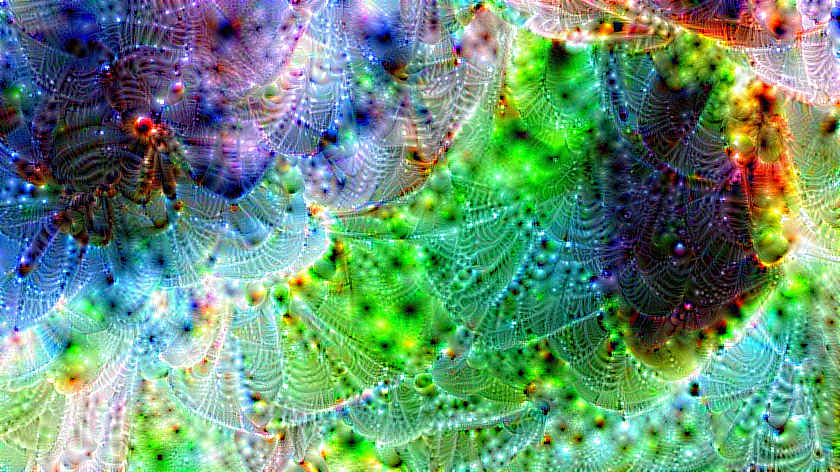

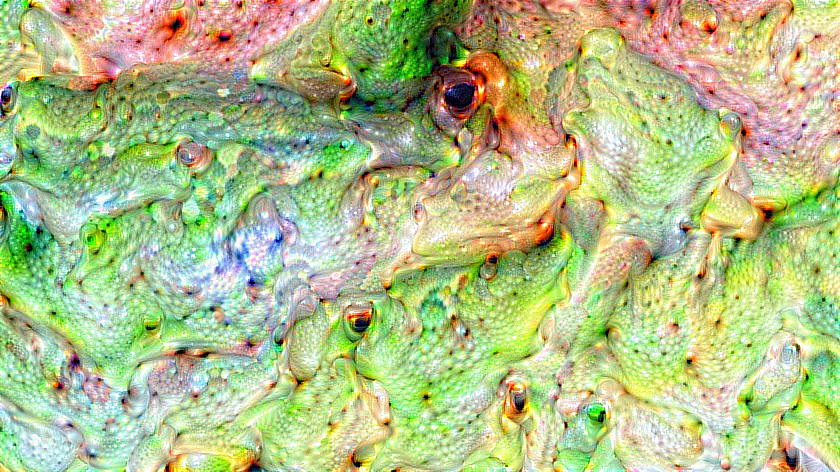

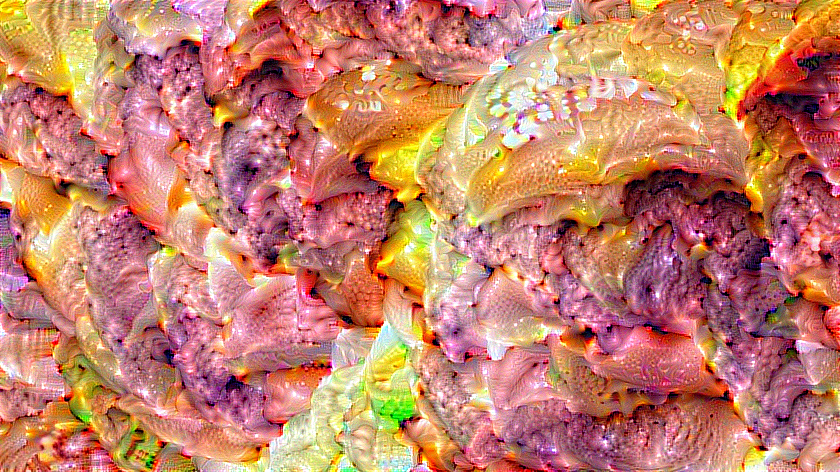

Here are some examples generated by people on Twitch over the past few days. They might not be as impressive as Google’s, but they are generated interactively, in real time.

This one shows what it typically looks like. With bee, for example, it knows that bees need wings, eyes, and bodies with legs, but it hasn’t really figured out complete anatomy yet. The parts need to be in there, but never mind their relative positions. It gives quite nightmarish representations of what it means to be a certain object, much like 20th-century deconstructionist artists.

Because spider webs need spiders. On the left of the image, two things are visible that look like spiders. Also note how the neural net figured out water drops, including reflection and refraction.

Tree frogs mostly look like a horrid blob of liquid frog.

Same for the cheeseburger, although it definitely looks like something I would eat (I’m quite hungry right now).

Same goes for pizza, but here we start to see another aspect of the neural net: it mixes aspects of the object’s environment with the object itself. With pizza, you can see distorted mouths at the bottom left.

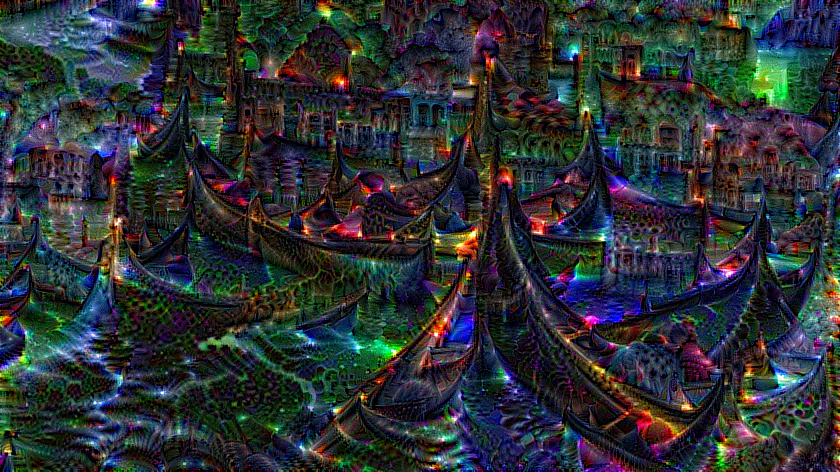

This is another example of how it mixes an object with its surroundings. Venice is apparently an integral part of a gondola, as can be seen in the background.

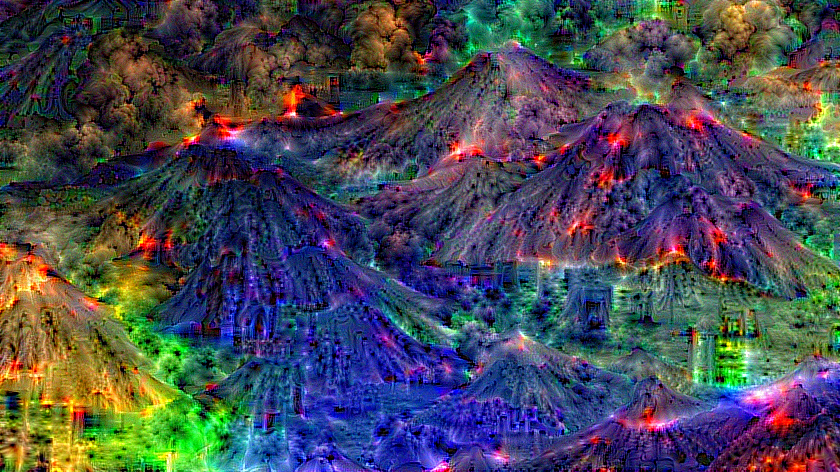

Note how with volcano, it figured out the color of the lava correctly, but not the color of the mountains.

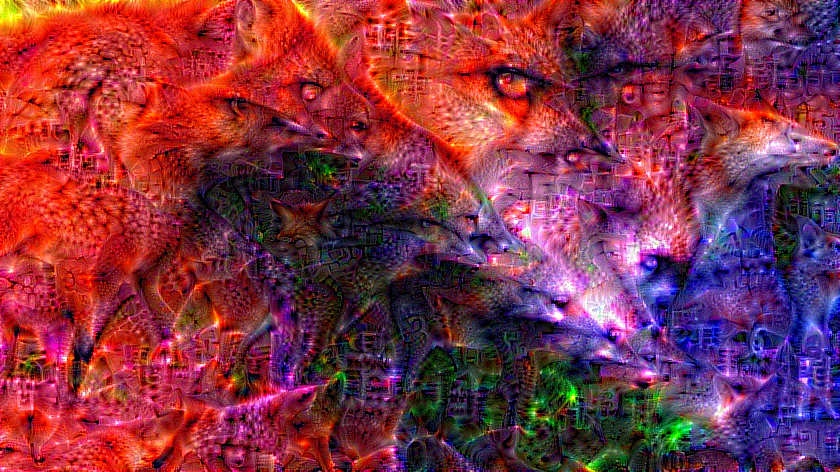

Another example showing that it learned some colours correctly is the fox. Foxes are ginger and live in the forest, hence this impressionistic figuration of what it means to be a fox.

But broccoli is definitely green!

This is a more artsy one, which I like: a representation of the White Rabbit from Alice in Wonderland.

I love this one. The subject is bubbles, but since bubbles are transparent, it also put eyes and mouths in there.

But go ahead and experiment for yourself on Twitch. The network knows all the words in this list; feel free to request them from the VJ-bot running the stream.

Edit: this was what it looked like live.

A link to the stream on Twitch, where you can use the chat to request objects.

The future

Last night, the guys at Google released more detailed instructions on how they did it. It appears that we got some things right (we also used Gaussian models as image priors), that we did some things differently (we multi-scaled the prior), and that they have ideas I can’t wait to try out!

So stay tuned: who knows what these neural nets will produce a couple of months from now!